🤖 Databricks MCP Catalog: The Missing Operational Layer for Enterprise Agents

The amount of adoption and hype around Model Context Protocol (MCP) has pleasantly surprised me lately. While I was skeptical at the start regarding its value proposition and how widely (or not) it would be adopted, I’m now seeing how it’s coming together.

The most important aspect of an agent is its tools, which give it access to your enterprise context as well as the outside world. I’m starting to see how MCP will enable more seamless agent integration with the outside world.

At Plains, we’ve fully embraced the Lakehouse architecture, and the possibilities expand dramatically when you have data from your critical applications centralized in one place. This presents some fascinating opportunities for MCP, which I’ll explore in detail below.

Databricks has been releasing lots of exciting features during their ‘Week of Agents’ campaign, and I wanted to cover their recent release around the MCP catalog and marketplace. This short post walks through how to get started and where I see some opportunities.

📢 Announcements

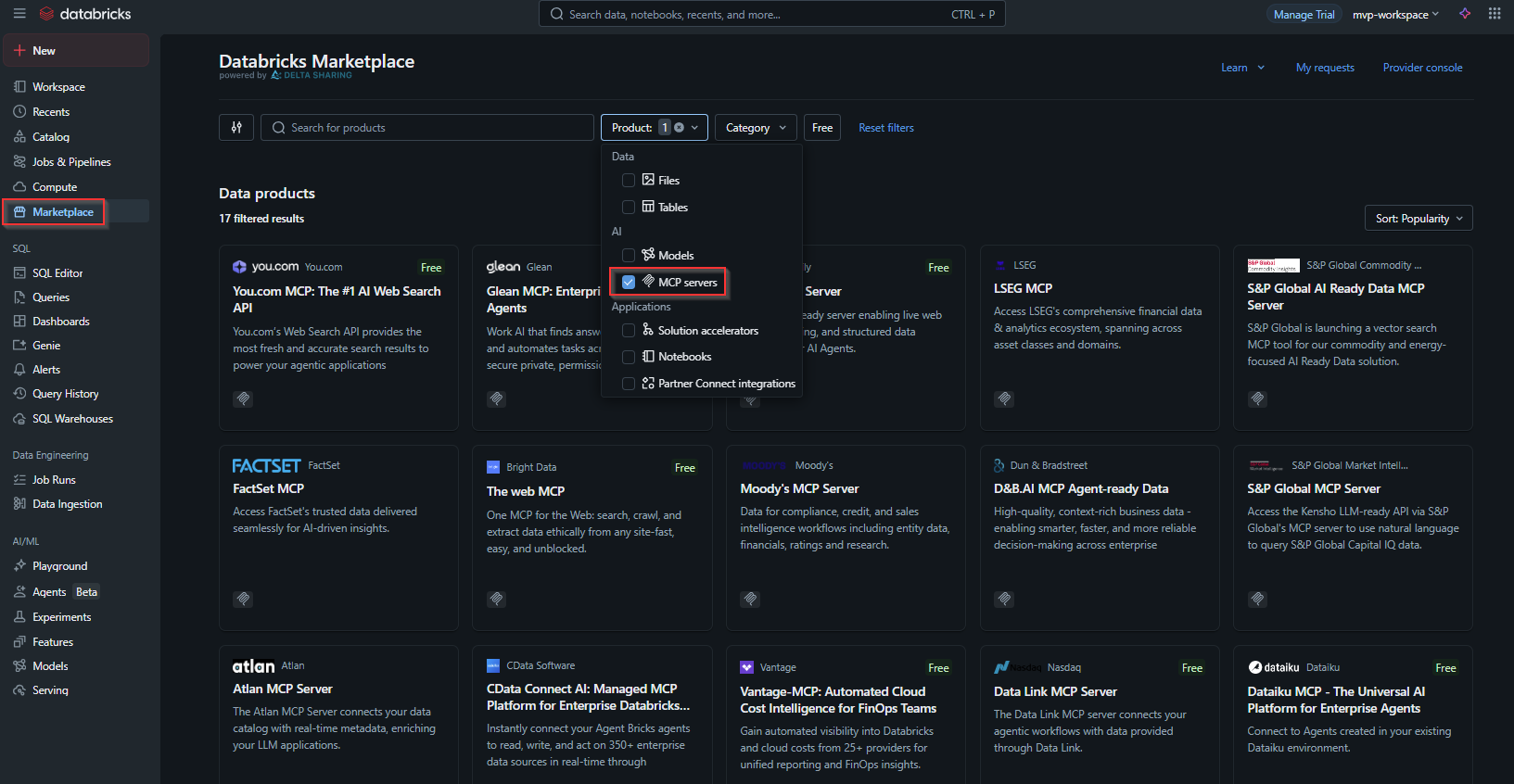

There were a few exciting announcements around MCP during this release, but it really boils down to discovery, governance, and actionability of MCP endpoints. On the discovery side, you can now explore external MCP servers via the marketplace:

There are some neat MCP integrations to S&P and Nasdaq and there are a few free ones to get started with. I will cover the easiest way to explore external MCP shortly.

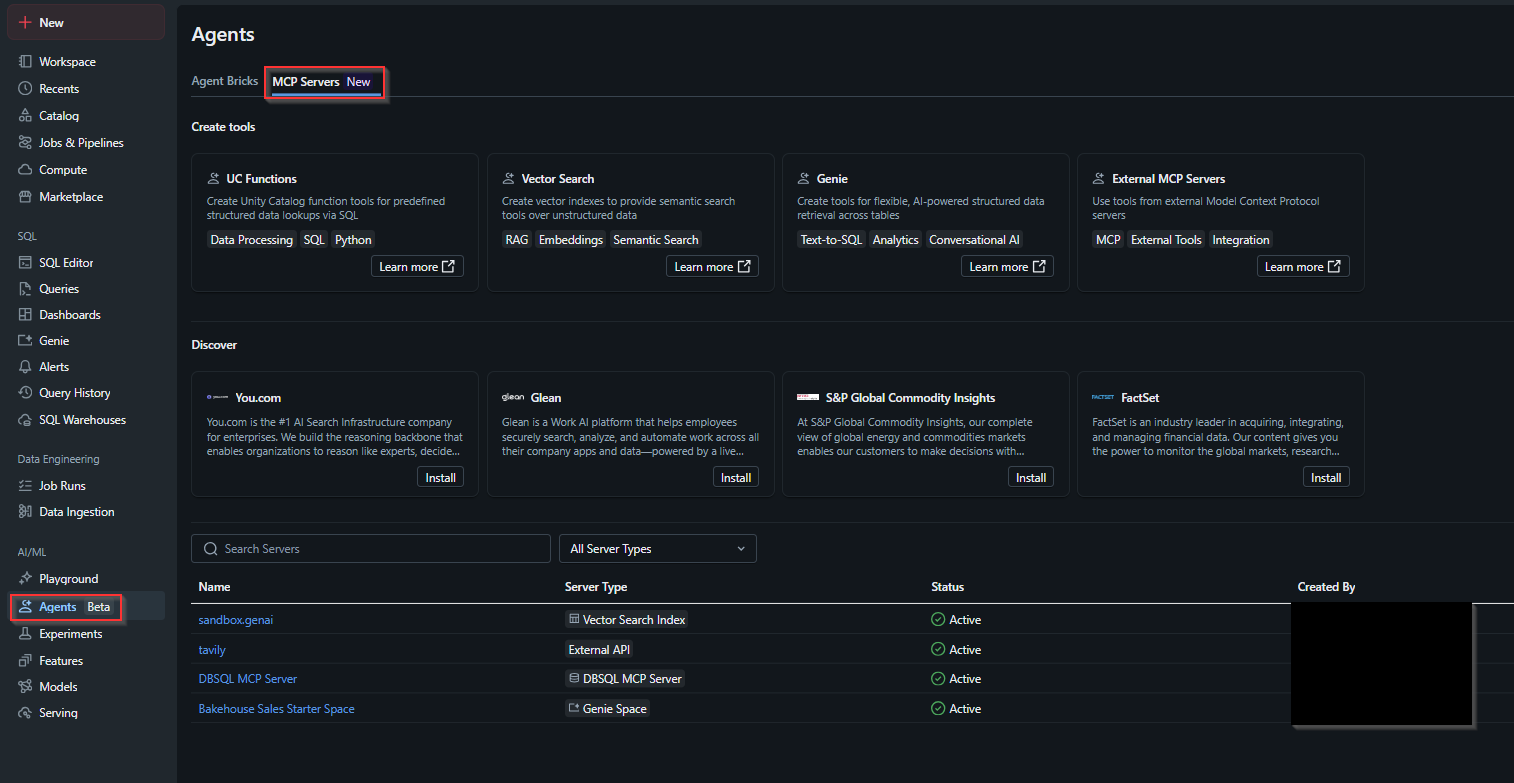

You can also more easily discover MCP servers hosted within your Databricks environment. If you think in terms of data products, discoverability is one of their most important attributes. I see MCP servers becoming products within your data and AI platform that take on a lifecycle of their own and enable agents within your environment to interact with your enterprise data.

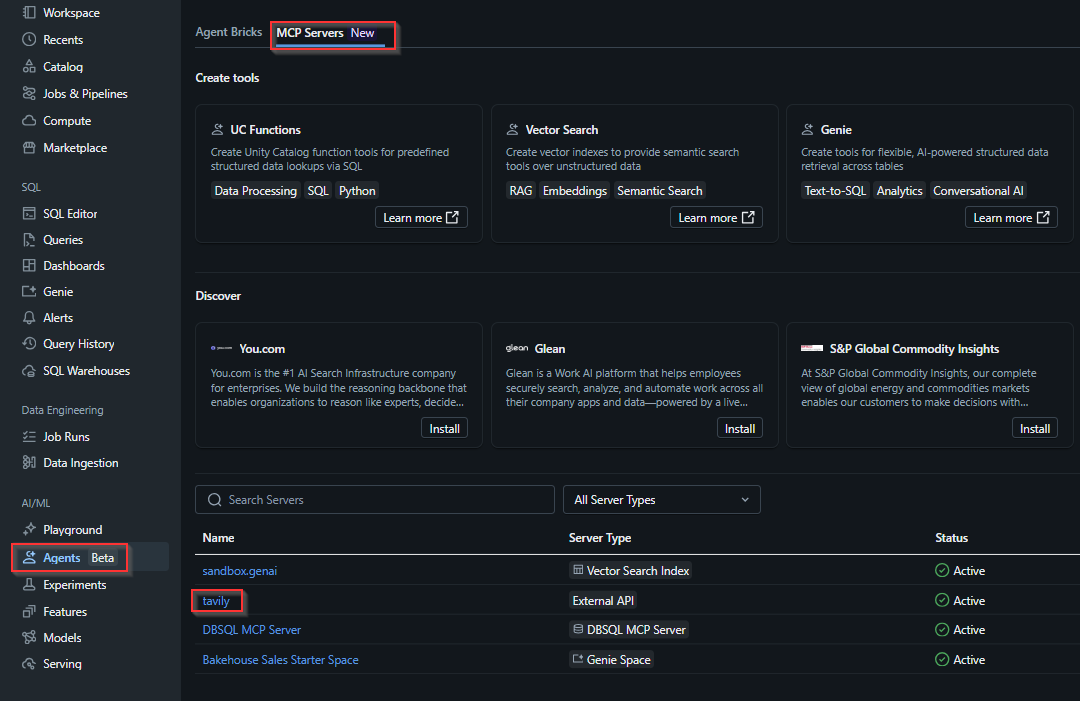

You can now explore MCP servers within your environment in the ‘Agents’ tab:

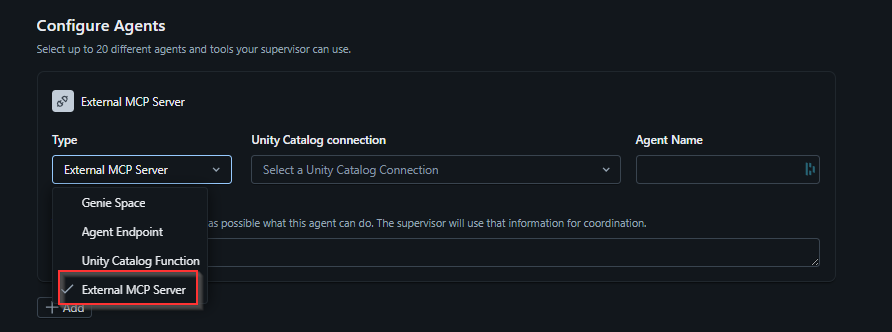

Lastly, the Multi-Agent supervisor now supports connecting to external MCP servers, giving agents access to AI-ready external data. I fully expect the offerings in this catalog to expand in the future.

🚀 Getting Started

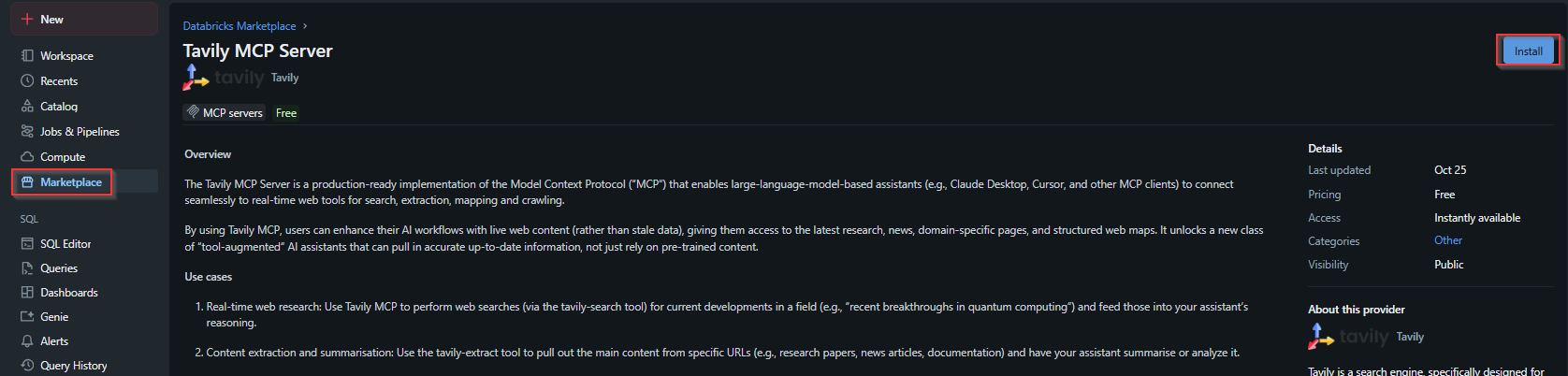

I would say the easiest way to get started with testing some of these new features is to select one of the free external MCP servers. I suggest the Tavily MCP that allows agents to hook into the web.

- Navigate to Tavily and create an account. You get 1000 free API calls a month to test out the functionality!

-

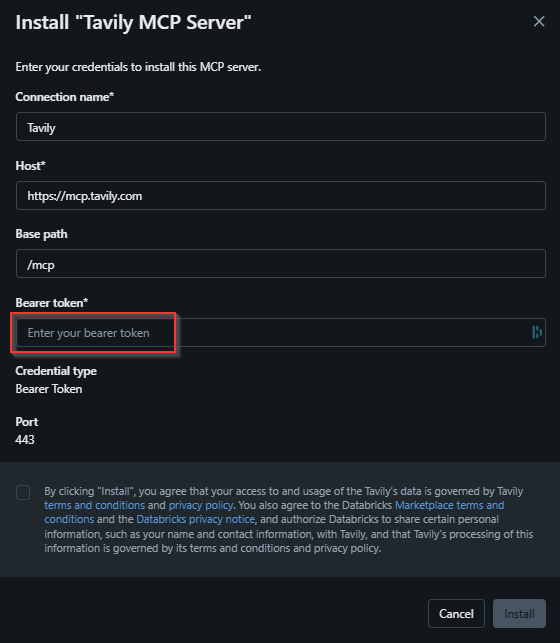

Next, navigate to the Databricks Marketplace and find the Tavily MCP server and select ‘Install’.

- Finally, place your API key from Tavily into the bearer token input and click ‘Install’.

- You should now be able to see your newly created Tavily MCP server in the catalog:

-

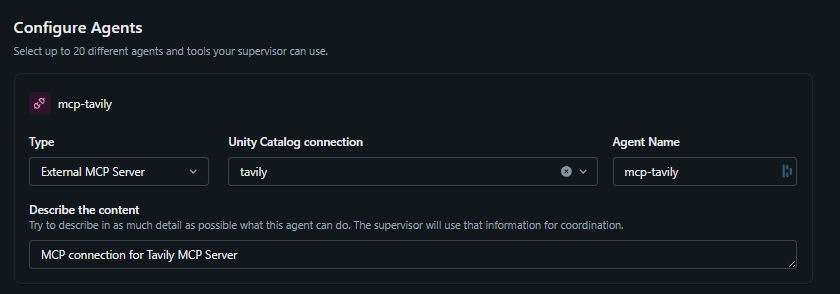

Now the fun part begins and we can create a multi-agent supervisor and give it access to the new MCP server:

-

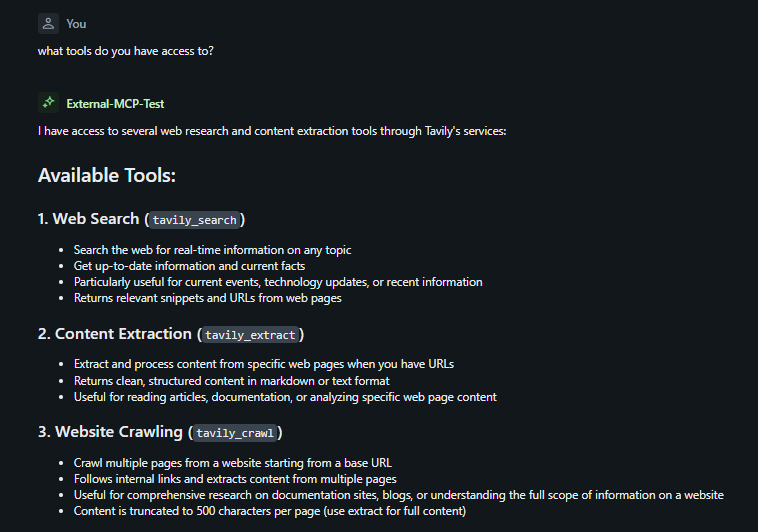

Asking the agent in the playground,

what tools do you have access to?is a good first test to make sure the agent has access to be able to interact with the Tavily MCP server: -
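For the curious, that first test maps onto MCP's tool-discovery step. Below is a minimal sketch of what such a request could look like on the wire, assuming the server speaks MCP over HTTP and that the bearer token from the install step travels as a standard `Authorization: Bearer` header. The URL and key are placeholders I made up, not the real Tavily or Databricks endpoints, and a real session would first perform MCP's `initialize` handshake, which I have left out here. The request is only built, never sent.

```python
import json
import urllib.request

# Placeholder values (assumptions): swap in your own endpoint and key.
MCP_URL = "https://example.com/mcp"
API_KEY = "YOUR_API_KEY"


def build_tools_list_request(url: str, token: str, request_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 `tools/list` request for an MCP server."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The bearer token entered at install time would be sent like this.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_tools_list_request(MCP_URL, API_KEY)
print(req.get_method(), req.full_url)
print(json.loads(req.data))
```

When the agent lists its tools in the playground, it is effectively summarizing the response to a request of this shape.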

Let’s give the agent a run for its money and ask a tough question:

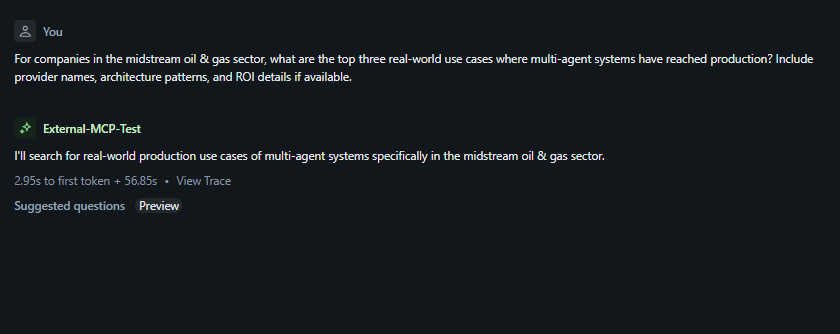

For companies in the midstream oil & gas sector, what are the top three real-world use cases where multi-agent systems have reached production? Include provider names, architecture patterns, and ROI details if available.

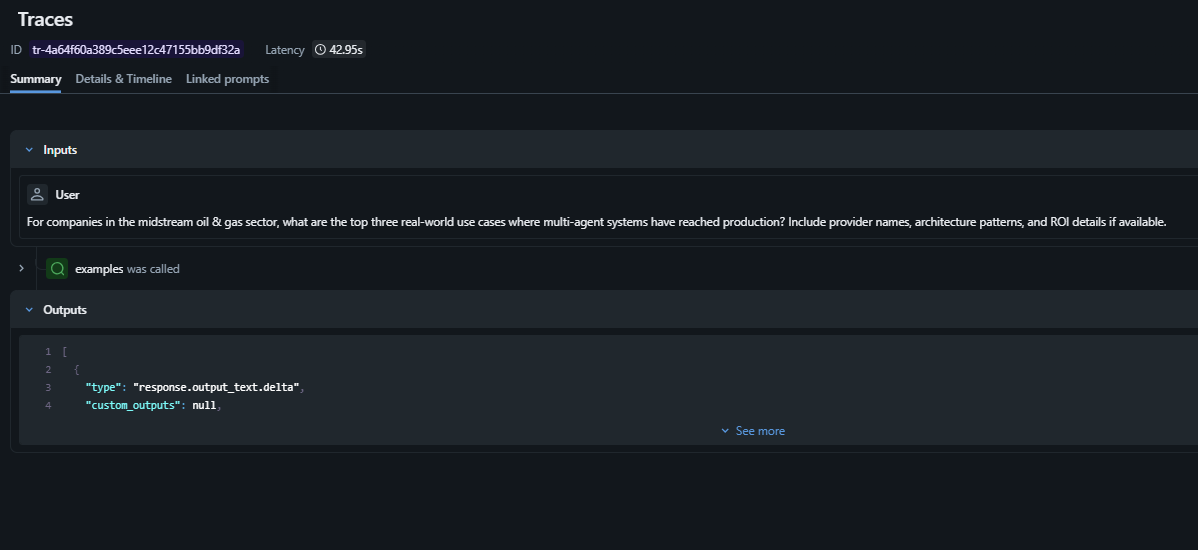

The agent seems to spin for about a minute, but no answer is returned:

The trace also does not seem to indicate that the Tavily MCP server was called; however, I can see the calls on my Tavily subscription.


Let’s try another question!

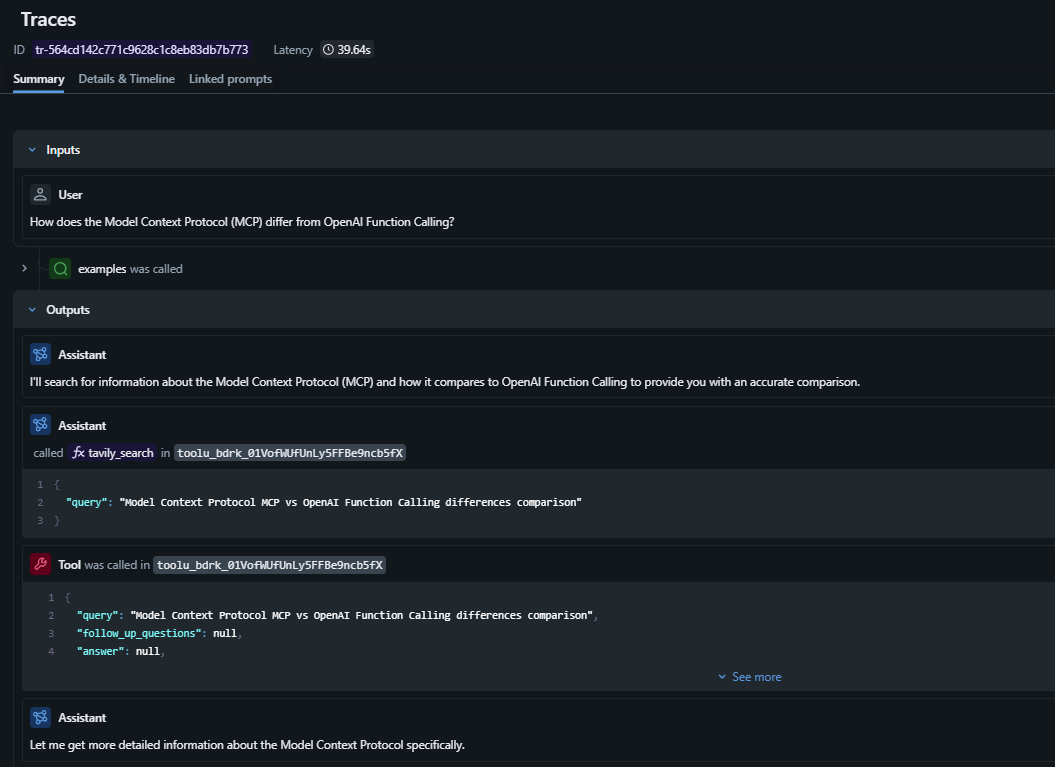

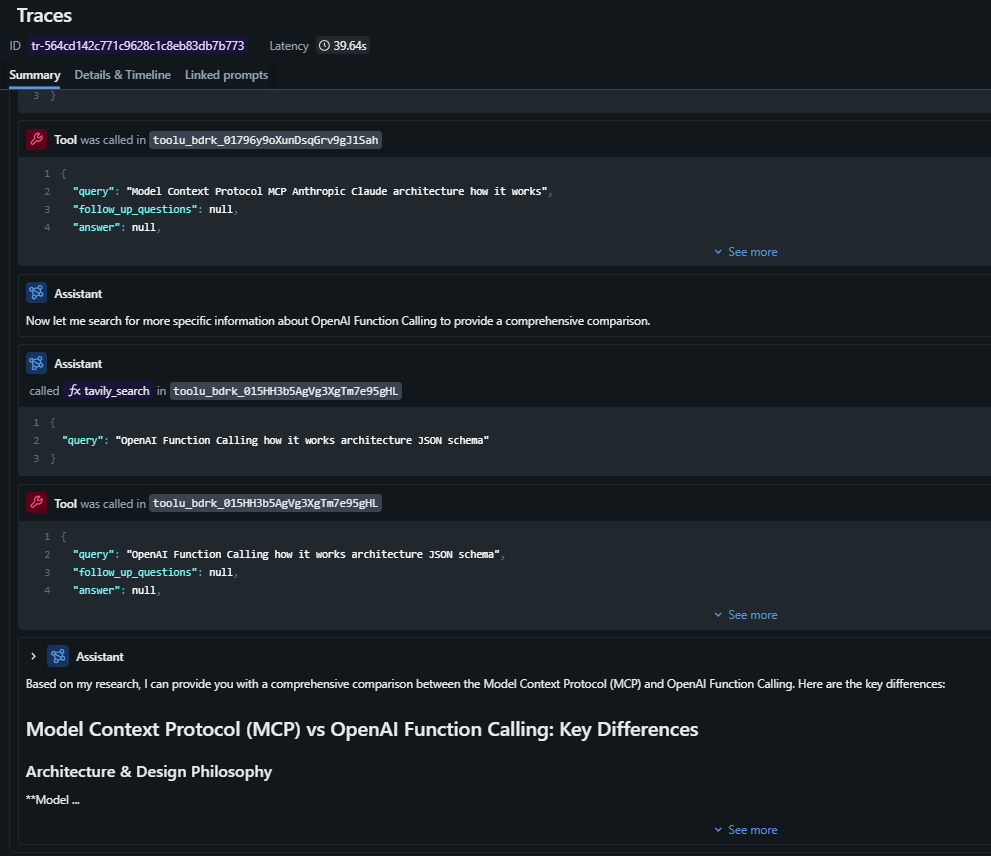

How does the Model Context Protocol (MCP) differ from OpenAI Function Calling?

This one worked really well. I can see in the MLflow trace that the Tavily MCP server was called multiple times to generate a final response:

I am also impressed with the answer. For reference, you can view the agent’s response to my second question and see how it did.

Note: I have converted the response to markdown for better readability.

💡 Use Case(s)

I’ll admit, some of the MCP use cases in the market right now still feel a bit aspirational. But I think that’s the point. The value comes before the use case. Getting ahead of the curve on how systems will be accessed by agents and ensuring your data is structured, cataloged, and centralized is what positions you to move fast when the patterns become clear.

Instead of waiting for the “perfect” GenAI use case to fall into our lap, the strategy is to get the prerequisites in place now:

- Data centralized

- Metadata and governance

- Services exposed consistently through something like MCP

Then iterate on use cases as the opportunities show up.

One area where this does feel tangible today is with the sensor data we collect from our pipelines. We are already well underway on a build out of an anomaly detection layer (both a rules-based engine and an ML classifier, e.g., XGBoost). But once an anomaly is flagged, an operator still has to go and validate whether it is real or explainable. This is a highly manual workflow that often requires switching between SCADA, historian data, Maximo, and inspection/maintenance logs.
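To make the rules-based side of that layer concrete, here is a minimal sketch of one possible rule: flag a reading when it sits far from the recent mean. This is an illustration only, not our actual engine. The function name, the z-score rule, the threshold, and the sample readings are all assumptions I made up for the example.

```python
from statistics import mean, stdev
from typing import List, Optional


def flag_anomaly(history: List[float], latest: float, z_threshold: float = 3.0) -> Optional[dict]:
    """Return alert details if `latest` deviates strongly from `history`, else None.

    Hypothetical rule: compare the latest reading to the recent mean,
    measured in sample standard deviations (a z-score).
    """
    if len(history) < 2:
        return None  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return None  # flat history: z-score is undefined, skipped in this sketch
    z = (latest - mu) / sigma
    if abs(z) < z_threshold:
        return None
    severity = "high" if abs(z) >= 2 * z_threshold else "medium"
    return {"z_score": round(z, 2), "severity": severity}


# Made-up suction pressure readings for illustration.
recent = [100.0, 101.0, 99.0, 100.5, 99.5]
print(flag_anomaly(recent, 100.2))  # small wobble, within the threshold
print(flag_anomaly(recent, 92.0))   # large drop, flagged
```

A rule like this only says that something looks off. It says nothing about why, which is where the manual validation comes in.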

An MCP-connected agent could replicate the exact steps an experienced operator takes to triage the anomaly but do it in seconds instead of minutes or hours.

The Agent’s Workflow

Once an anomaly is detected, the agent would:

- Automatically trigger from the alert
  - Input comes from the classifier/rule engine
  - Pass along signal metadata (location, severity, timestamp, sensor type)

- Check maintenance history via Maximo
  - “Is there a work order already open for the affected equipment?”
  - If yes → annotate the alert as operationally explained

- Query SCADA / historian for recent operating patterns
  - Compare pressures/flows/temperatures before and after anomaly
  - Detect step changes vs gradual drift vs sensor noise patterns

- Look at nearby related assets
  - Sometimes upstream equipment explains downstream anomalies
  - Check if other components in the same block also changed state

- Retrieve relevant inspection or ILI records
  - Determine if this area has prior corrosion, fatigue, or coating damage history

- Return a structured human friendly report

Example output:

Anomaly at Station 12 Compressor Train B. No active work order. Upstream suction pressure dropped 8% at the same timestamp, possibly due to upstream supply swings. Last ILI shows no integrity red flags. Recommend monitoring; no immediate action required.
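The workflow above can be sketched in a few lines of Python. This is a rough illustration under heavy assumptions: the three tool functions are stubs returning made-up values and stand in for whatever MCP tools would wrap Maximo, the historian, and the ILI records. None of the names are real APIs, and the 5% cutoff is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Alert:
    """Signal metadata passed along from the classifier/rule engine."""
    location: str
    severity: str
    timestamp: str
    sensor_type: str


# Hypothetical stand-ins for MCP tools; each returns made-up data.
def maximo_open_work_order(location: str) -> Optional[str]:
    return None  # no open work order in this example


def historian_upstream_pressure_change(location: str, timestamp: str) -> float:
    return -8.0  # percent change in upstream suction pressure


def ili_red_flags(location: str) -> List[str]:
    return []  # no prior corrosion, fatigue, or coating findings


def triage(alert: Alert, tools: Dict[str, Callable]) -> str:
    """Run the triage steps in order and return a short report."""
    work_order = tools["work_order"](alert.location)
    change_pct = tools["upstream_change"](alert.location, alert.timestamp)
    red_flags = tools["ili"](alert.location)

    parts = [f"Anomaly at {alert.location}."]
    if work_order:
        parts.append(f"Work order {work_order} is open; alert is operationally explained.")
    else:
        parts.append("No active work order.")
    if abs(change_pct) >= 5.0:  # arbitrary cutoff for a notable change
        parts.append(f"Upstream suction pressure changed {change_pct:+.0f}% at the same timestamp.")
    if red_flags:
        parts.append("Prior ILI findings: " + ", ".join(red_flags) + ".")
        parts.append("Recommend inspection.")
    else:
        parts.append("Last ILI shows no integrity red flags.")
        parts.append("Recommend monitoring; no immediate action required.")
    return " ".join(parts)


tools = {
    "work_order": maximo_open_work_order,
    "upstream_change": historian_upstream_pressure_change,
    "ili": ili_red_flags,
}
alert = Alert("Station 12 Compressor Train B", "medium", "2025-01-01T00:00:00Z", "pressure")
report = triage(alert, tools)
print(report)
```

In a real agent the LLM would decide which tools to call and how to word the report. The fixed order here just mirrors the steps an operator takes.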

The cool part of this potential use case is that MCP standardizes how agents access enterprise systems, and with MCP servers discoverable via the MCP catalog, other agent builders can tie into existing functionality.
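To show what that standardization buys you, here is a small sketch of the two shapes MCP defines for tools: a descriptor (with `name`, `description`, and a JSON Schema `inputSchema`) and a JSON-RPC `tools/call` request. The tool itself, `get_open_work_orders`, and the equipment ID are hypothetical. I am not describing a real Maximo integration.

```python
import json

# Hypothetical tool descriptor, in the shape an MCP server advertises.
work_order_tool = {
    "name": "get_open_work_orders",
    "description": "Return open work orders for a piece of equipment.",
    "inputSchema": {
        "type": "object",
        "properties": {"equipment_id": {"type": "string"}},
        "required": ["equipment_id"],
    },
}


def build_tool_call(tool: dict, arguments: dict, request_id: int) -> dict:
    """Build a JSON-RPC 2.0 `tools/call` message after a basic required-field check."""
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


call = build_tool_call(work_order_tool, {"equipment_id": "STN12-CT-B"}, request_id=2)
print(json.dumps(call, indent=2))
```

Because every server describes its tools this way, a second agent builder only needs the descriptor from the catalog to start calling the same tool.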

🎯 Conclusion

The introduction of the MCP catalog and marketplace represents a significant step forward in making enterprise AI agents more accessible and operational. By providing a centralized hub for discovering, managing, and utilizing MCP servers, Databricks is addressing key challenges in enterprise agent development. As the ecosystem grows and more organizations contribute their MCP servers, we’ll likely see an acceleration in the adoption of agent-based solutions across industries.

It would be really neat if eventually these MCP servers were also exposed in Databricks One for the business to discover agents and the tools they can access.

These features are still in beta, so you should expect to run into issues like the one we saw in the ‘Getting Started’ section, where the agent did not reply at all. I am excited to see where this goes next.

Thanks for reading! 🙂